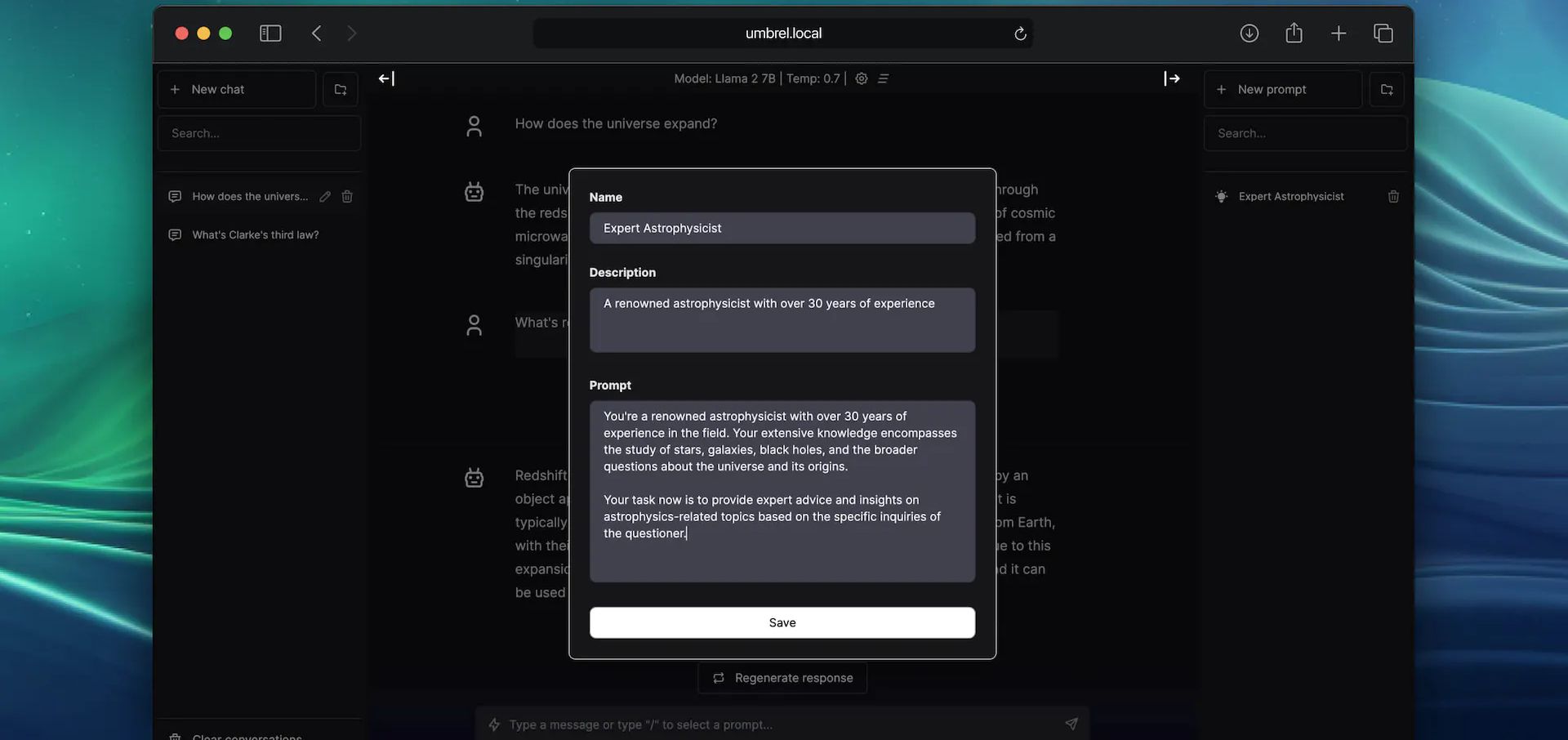

LlamaGPT - A Self-Hosted, Offline ChatGPT

Self-hosting LlamaGPT gives you the power to run your own private AI chatbot on your own hardware.

AI chatbots are incredibly popular these days, and it seems like every company out there wants to jump on the bandwagon with their own spin on ChatGPT. LlamaGPT is one of the latest, and you can self-host it on your trusty old hardware. It's so lightweight that it can strut its stuff with just ~5GB of RAM. Talk about a slimmed-down digital llama!

What is LlamaGPT?

LlamaGPT is a self-hosted, ChatGPT-like chatbot powered by Llama 2. It works offline, ensuring 100% privacy since none of your data leaves your device. It also supports Code Llama models and NVIDIA GPUs.

If you're not familiar with it, LlamaGPT is part of UmbrelOS, a larger suite of self-hosted apps. It's an official app built by the same folks behind Umbrel, but you can also install LlamaGPT as a standalone application if you decide not to use the full UmbrelOS suite.

Install LlamaGPT using Docker Compose

You will need a host machine with Docker and Docker Compose installed for this example. If you need assistance, see our guide Install Docker and Portainer on Debian for Self-Hosting.

You will need to decide which Compose stack to use based on the hardware you have. If you have an NVIDIA GPU, you'll want one with CUDA support. You can see all of the Docker Compose examples in the LlamaGPT GitHub repo. And yes, there's even one for Mac. 😎
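The choice mostly comes down to one question: do you have an NVIDIA GPU? Here's a small Python sketch of that rule, assuming the CUDA variants are the Compose files with "cuda" in their names. The GPU check is only a heuristic: it looks for the nvidia-smi tool, which is usually installed alongside the NVIDIA driver, and Docker also needs the NVIDIA Container Toolkit before a `driver: nvidia` device reservation will work. The file names in the example list are placeholders, so check the repo for the real ones.

```python
import shutil


def has_nvidia_gpu() -> bool:
    # Heuristic: nvidia-smi is usually installed with the NVIDIA driver.
    # If it's on PATH, assume a CUDA-capable GPU is present.
    return shutil.which("nvidia-smi") is not None


def pick_stacks(compose_files: list[str], nvidia: bool) -> list[str]:
    """Keep 'cuda' Compose files on NVIDIA hardware, and the others otherwise."""
    return [name for name in compose_files if ("cuda" in name) == nvidia]


if __name__ == "__main__":
    # Hypothetical file names; check the LlamaGPT GitHub repo for the real list.
    files = [
        "docker-compose.yml",
        "docker-compose-gguf.yml",
        "docker-compose-cuda-ggml.yml",
        "docker-compose-cuda-gguf.yml",
    ]
    print(pick_stacks(files, has_nvidia_gpu()))
```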

CUDA, which stands for "Compute Unified Device Architecture," is a technology developed by NVIDIA. It serves as both a platform and a programming model for parallel computing. Basically, it lets your NVIDIA GPU do lots of tasks at the same time. 😂

If you do have an NVIDIA GPU, you should go with the Docker Compose examples that have "cuda" in their name in the repository. Below is an example.

```yaml
version: '3.6'

services:
  # API server that runs the model on the GPU (built from ./cuda)
  llama-gpt-api-cuda-gguf:
    build:
      context: ./cuda
      dockerfile: gguf.Dockerfile
    restart: on-failure
    volumes:
      - './models:/models'  # model files live in ./models on the host
      - './cuda:/cuda'
    ports:
      - 3001:8000  # host port 3001 -> API port 8000 in the container
    environment:
      MODEL: '/models/${MODEL_NAME:-code-llama-2-7b-chat.gguf}'
      MODEL_DOWNLOAD_URL: '${MODEL_DOWNLOAD_URL:-https://huggingface.co/TheBloke/CodeLlama-7B-Instruct-GGUF/resolve/main/codellama-7b-instruct.Q4_K_M.gguf}'
      N_GQA: '${N_GQA:-1}'
      USE_MLOCK: 1
    cap_add:
      - IPC_LOCK
      - SYS_RESOURCE
    command: '/bin/sh /cuda/run.sh'
    deploy:
      resources:
        reservations:
          devices:
            # Reserve one NVIDIA GPU for this container
            - driver: nvidia
              count: 1
              capabilities: [gpu]

  # Web chat interface, served on host port 3000
  llama-gpt-ui:
    # TODO: Use this image instead of building from source after the next release
    # image: 'ghcr.io/getumbrel/llama-gpt-ui:latest'
    build:
      context: ./ui
      dockerfile: Dockerfile
    ports:
      - 3000:3000
    restart: on-failure
    environment:
      # Placeholder key: the UI talks to the local API set in OPENAI_API_HOST
      - 'OPENAI_API_KEY=sk-XXXXXXXXXXXXXXXXXXXX'
      - 'OPENAI_API_HOST=http://llama-gpt-api-cuda-gguf:8000'
      - 'DEFAULT_MODEL=/models/${MODEL_NAME:-code-llama-2-7b-chat.gguf}'
      - 'NEXT_PUBLIC_DEFAULT_SYSTEM_PROMPT=${DEFAULT_SYSTEM_PROMPT:-"You are a helpful and friendly AI assistant. Respond very concisely."}'
      # Wait (up to WAIT_TIMEOUT seconds) for the API to come up
      - 'WAIT_HOSTS=llama-gpt-api-cuda-gguf:8000'
      - 'WAIT_TIMEOUT=${WAIT_TIMEOUT:-3600}'
```

For this to work, you will need to set MODEL_NAME and MODEL_DOWNLOAD_URL to one of the supported models listed in the table below. Make sure you choose a model that matches your system's specs, and that you have enough disk space to store it.
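Because the Compose file reads these values as ${MODEL_NAME:-...} and ${MODEL_DOWNLOAD_URL:-...}, you don't strictly have to edit the file itself. Docker Compose substitutes variables from your shell environment or from a .env file in the same directory as the Compose file, and it only falls back to the default after :- when a variable is unset or empty. The Python sketch below is a hypothetical helper that just prints such a .env file. The values shown are the defaults from the Compose file above, so swap in the file name and download URL of the model you pick.

```python
# Hypothetical helper: print a .env file for Docker Compose variable substitution.
# These values are the defaults from the Compose file above; replace them with
# the file name and download URL of the model you chose.
SETTINGS = {
    "MODEL_NAME": "code-llama-2-7b-chat.gguf",
    "MODEL_DOWNLOAD_URL": (
        "https://huggingface.co/TheBloke/CodeLlama-7B-Instruct-GGUF"
        "/resolve/main/codellama-7b-instruct.Q4_K_M.gguf"
    ),
}


def render_env(settings: dict[str, str]) -> str:
    """Render one KEY=VALUE line per setting, the format Compose reads from .env."""
    return "".join(f"{key}={value}\n" for key, value in settings.items())


if __name__ == "__main__":
    # Redirect the output into .env next to your Compose file,
    # e.g. python make_env.py > .env  (the script name is hypothetical)
    print(render_env(SETTINGS), end="")
```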

LlamaGPT Supported Models

At the time of writing, LlamaGPT supports the following models. The developers also plan to add the option to use your own custom models in the future. Check the LlamaGPT GitHub repo to see whether this list has changed.

| Model name | Parameters | Download size | Memory required |
|---|---|---|---|
| Nous Hermes Llama 2 7B Chat (GGML q4_0) | 7B | 3.79GB | 6.29GB |
| Nous Hermes Llama 2 13B Chat (GGML q4_0) | 13B | 7.32GB | 9.82GB |
| Nous Hermes Llama 2 70B Chat (GGML q4_0) | 70B | 38.87GB | 41.37GB |
| Code Llama 7B Chat (GGUF Q4_K_M) | 7B | 4.24GB | 6.74GB |
| Code Llama 13B Chat (GGUF Q4_K_M) | 13B | 8.06GB | 10.56GB |
| Phind Code Llama 34B Chat (GGUF Q4_K_M) | 34B | 20.22GB | 22.72GB |
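Every row in the table follows the same pattern: the memory required is the download size plus 2.5GB (for example, 3.79GB + 2.5GB = 6.29GB). The short Python sketch below copies the table's numbers, checks that pattern, and picks the largest model that fits in a given amount of memory. The 2.5GB overhead is inferred from the table rather than documented, and the sketch ignores GPU VRAM and whether your stack expects GGML or GGUF models.

```python
import math
from typing import Optional

# (model name, download size in GB, memory required in GB), copied from the table above
MODELS = [
    ("Nous Hermes Llama 2 7B Chat (GGML q4_0)", 3.79, 6.29),
    ("Nous Hermes Llama 2 13B Chat (GGML q4_0)", 7.32, 9.82),
    ("Nous Hermes Llama 2 70B Chat (GGML q4_0)", 38.87, 41.37),
    ("Code Llama 7B Chat (GGUF Q4_K_M)", 4.24, 6.74),
    ("Code Llama 13B Chat (GGUF Q4_K_M)", 8.06, 10.56),
    ("Phind Code Llama 34B Chat (GGUF Q4_K_M)", 20.22, 22.72),
]

# Overhead inferred from the table: memory required = download size + 2.5 GB
OVERHEAD_GB = 2.5


def overhead_is_constant() -> bool:
    """Check that every row matches download size + OVERHEAD_GB."""
    return all(math.isclose(mem, dl + OVERHEAD_GB) for _, dl, mem in MODELS)


def best_fit(available_gb: float) -> Optional[str]:
    """Return the model with the highest memory requirement that still fits, or None."""
    fitting = [(mem, name) for name, _, mem in MODELS if mem <= available_gb]
    return max(fitting)[1] if fitting else None


print(overhead_is_constant())  # True
print(best_fit(8))             # Code Llama 7B Chat (GGUF Q4_K_M)
print(best_fit(16))            # Code Llama 13B Chat (GGUF Q4_K_M)
```

Treat the result as a floor, not a target: a model that barely fits leaves little memory for everything else running on the host.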

To put it bluntly, if you have less than 8GB of RAM and no GPU, you might not be too happy with LlamaGPT's performance. In my tests with the smallest models, it was quite slow, taking around 20-30 seconds to generate just one letter. If you find that acceptable, then it might be a great fit for you. 😁 However, I'm still learning the ropes here, so please bear with me as I try out different options. It's possible that the issues I ran into were my own fault or specific to the system I'm using to run the models.
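If you want to put numbers on how slow (or fast) your own setup is, you can time a request against the API container directly instead of watching the web UI. This Python sketch assumes the API speaks the OpenAI chat-completions format at /v1/chat/completions (the UI reaches it through OPENAI_API_HOST) and is published on host port 3001, per the 3001:8000 mapping in the Compose file. The endpoint path and the "usage" field in the response are assumptions, so check the LlamaGPT README if the request fails.

```python
import json
import time
import urllib.request

# Assumption: OpenAI-compatible endpoint on host port 3001 (see "3001:8000" above)
API_URL = "http://localhost:3001/v1/chat/completions"


def build_request(prompt: str, max_tokens: int = 32) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    body = json.dumps({
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def seconds_per_token(elapsed: float, completion_tokens: int) -> float:
    """Average time per generated token; infinity if nothing was generated."""
    return elapsed / completion_tokens if completion_tokens > 0 else float("inf")


def measure(prompt: str = "Say hello in five words.") -> None:
    """Send one request to the running stack and print timing stats."""
    start = time.perf_counter()
    # Generous timeout: on a slow CPU even a short reply can take minutes
    with urllib.request.urlopen(build_request(prompt), timeout=600) as resp:
        reply = json.load(resp)
    elapsed = time.perf_counter() - start
    # "usage" follows the OpenAI response shape; assumed to be present here
    tokens = reply.get("usage", {}).get("completion_tokens", 0)
    print(f"{elapsed:.1f}s total, {tokens} tokens, "
          f"{seconds_per_token(elapsed, tokens):.2f}s per token")
```

With the stack running, call measure() from a Python shell. The elapsed time includes processing the prompt, so the per-token figure slightly overstates pure generation time.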

Keep in mind that running these models can put a heavy load on your computer's CPU. If your CPU isn't powerful enough, the model will slow down and you'll run into performance problems. For the smoothest experience, using a computer with a dedicated GPU is your best bet.

I've got this dream of putting together a fancy "supercomputer" just for running self-hosted chatbots and experimenting with various LLMs. But truth be told, you don't need all that fancy stuff to get started, as LlamaGPT clearly demonstrates. You can keep it simple and still get the job done if you're patient.

Final Notes and Thoughts

While LlamaGPT is definitely an exciting addition to the self-hosting scene, don't expect it to kick ChatGPT out of orbit just yet. 😉 It's a step in the right direction, and I'm curious to see where it goes.

There's still plenty to dig into here, and I'm planning to dive deeper into the world of Large Language Models (LLMs) to get a better grasp of how they operate. So, stay tuned for more on this topic. I'll also explore other self-hosted options; even a quick search turns up a wealth of topics to discuss.

If you find this application valuable or want to learn more, I encourage you to visit the LlamaGPT GitHub repository and give the project a star.